Computational Methods

Background and Motivation

To make relevant predictions using a computer, it is necessary to use computational methods, that is, algorithms for simulating systems of interest or calculating their properties. While it is easy today to use supercomputers, even very large ones, to capacity, it is not easy to do so in an intelligent way. A major hurdle for the simulation methodologies we employ routinely (primarily molecular dynamics) is their limited scalability: because each time step depends on the previous one, a calculation cannot be sped up arbitrarily by using more resources in parallel. Many real phenomena we are interested in, like protein aggregation or ligand binding, are associated with slow time scales, and this is where so-called advanced sampling methods come into play. An even more ubiquitous hurdle is the accuracy of computational models and the approximations they introduce, with the inevitable caveat that these models undergo continuous refinement.

Our Approach

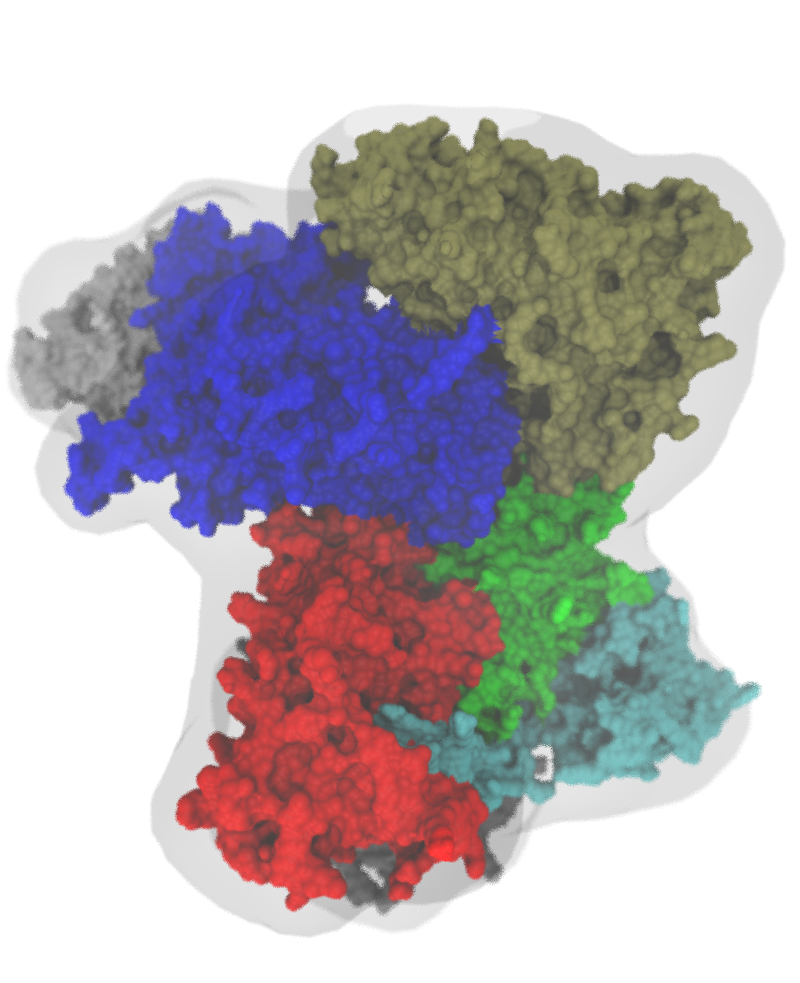

The lab has a long-running interest in reducing the cost of calculating the interactions in a single molecular dynamics time step. Two important implicit solvent models, SASA and FACTS, were developed in an effort that benefited from the simultaneous development of the energy functions underlying our in-house docking software, in particular SEED. Implicit solvent models are a type of coarse-graining that eliminates explicit water molecules from otherwise particle-based simulations. More drastic coarse-graining strategies were employed to study fibril formation of amyloidogenic peptides. More recently, we have taken an interest in self-guided, parallel simulation strategies (free energy-guided and progress index-guided sampling), projects that went hand in hand with our efforts in data science. Aside from experimental validation, an approach we have pursued to improve the quality of models is to incorporate measurement signals (e.g., from NMR or electron microscopy) directly into simulations.
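
To illustrate how an implicit solvent term can stand in for explicit water, here is a minimal sketch of a surface-area-based solvation energy of the common form E = Σ σ_i A_i, where A_i is the solvent-accessible surface area of atom i and σ_i is a per-atom parameter. This is not the lab's SASA or FACTS implementation: the areas are computed with the generic Shrake-Rupley point-counting scheme as a stand-in, and the function names, the probe radius of 1.4 Å, and any σ values passed in are assumptions chosen for illustration.

```python
import numpy as np

def sphere_points(n):
    """Roughly uniform points on the unit sphere (golden-spiral construction)."""
    k = np.arange(n) + 0.5
    polar = np.arccos(1.0 - 2.0 * k / n)
    azimuth = np.pi * (1.0 + 5.0 ** 0.5) * k
    return np.column_stack((np.sin(polar) * np.cos(azimuth),
                            np.sin(polar) * np.sin(azimuth),
                            np.cos(polar)))

def sasa_per_atom(coords, radii, probe=1.4, n_points=960):
    """Per-atom solvent-accessible surface area by Shrake-Rupley point counting.

    coords: (N, 3) positions, radii: (N,) atomic radii, same length unit.
    probe is the assumed solvent probe radius.
    """
    coords = np.asarray(coords, dtype=float)
    expanded = np.asarray(radii, dtype=float) + probe
    unit = sphere_points(n_points)
    areas = np.empty(len(coords))
    for i in range(len(coords)):
        # Test points on the probe-expanded sphere of atom i.
        surface = coords[i] + expanded[i] * unit
        buried = np.zeros(n_points, dtype=bool)
        for j in range(len(coords)):
            if j != i:
                d2 = np.sum((surface - coords[j]) ** 2, axis=1)
                buried |= d2 < expanded[j] ** 2
        # Exposed fraction of points times the full sphere area.
        areas[i] = 4.0 * np.pi * expanded[i] ** 2 * (1.0 - buried.mean())
    return areas

def solvation_energy(coords, radii, sigma, probe=1.4):
    """Sum of sigma_i * A_i; sigma holds hypothetical per-atom parameters."""
    return float(np.dot(sigma, sasa_per_atom(coords, radii, probe)))
```

The double loop over atoms keeps the sketch readable but scales quadratically with system size; production implicit solvent models typically rely on analytical approximations and neighbor lists so that the term stays cheap relative to the rest of the time step.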